How Does It Work?

The main simplification we made was calling gradient boosting a neural network. Most people have at least heard of neural networks, so we decided not to be pedantic about explaining what boosting is, since almost no one cares about the distinction.

So we used the beloved CatBoost from Yandex, because it is fast and easy to learn.

Getting Started

Gradient boosting is a machine learning method for building a predictive model that makes accurate forecasts from a large number of different features. It works like this: first, we build a simple model that can make some predictions. Then we look at where this model makes mistakes and add another model that corrects the errors of the first one. After that, we look at the errors that remain and build yet another model to correct those.

We continue this process until the combined model becomes accurate enough. The main idea of gradient boosting is that each new model takes into account the errors of the previous models and so improves the accuracy of the ensemble as a whole. Our model went through 2000 such stages.

Once all models are built, we combine them to get the final model, which can make accurate predictions based on the data we feed it.
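The loop described above can be sketched in a few lines of plain Python. This is only a toy illustration under stated assumptions, not what CatBoost does internally: the "simple model" is a one-split stump, the error measure is squared error, and the names `fit_stump`, `boost`, `predict`, `mse`, the learning rate, and the data are all made up for the example.

```python
# Toy gradient boosting for squared error with one-split "stumps".
# All names and numbers are illustrative assumptions, not CatBoost's API.

def fit_stump(xs, residuals):
    """Pick the threshold that best splits the residuals into two constants."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the largest x so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)


def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def boost(xs, ys, n_stages=50, learning_rate=0.3):
    """Start from the mean, then repeatedly fit a stump to the remaining errors."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_stages):
        residuals = [y - p for y, p in zip(ys, preds)]  # where we are still wrong
        stump = fit_stump(xs, residuals)                # new model targets those errors
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, x)
                 for p, x in zip(preds, xs)]
    return base, stumps, learning_rate


def predict(model, x):
    """The final model is the starting value plus the scaled sum of all stumps."""
    base, stumps, learning_rate = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)


def mse(model, xs, ys):
    return sum((predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data: low values for x <= 4, high values for x > 4.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]

one_stage = boost(xs, ys, n_stages=1)
many_stages = boost(xs, ys, n_stages=50)
print("error after 1 stage:  ", mse(one_stage, xs, ys))
print("error after 50 stages:", mse(many_stages, xs, ys))
```

On this toy data the training error shrinks as stages are added, which is the whole point of stacking many weak models. A real library adds much more on top (multi-feature trees, regularization, handling of categorical features), so treat this only as a picture of the idea.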

Titanic Example

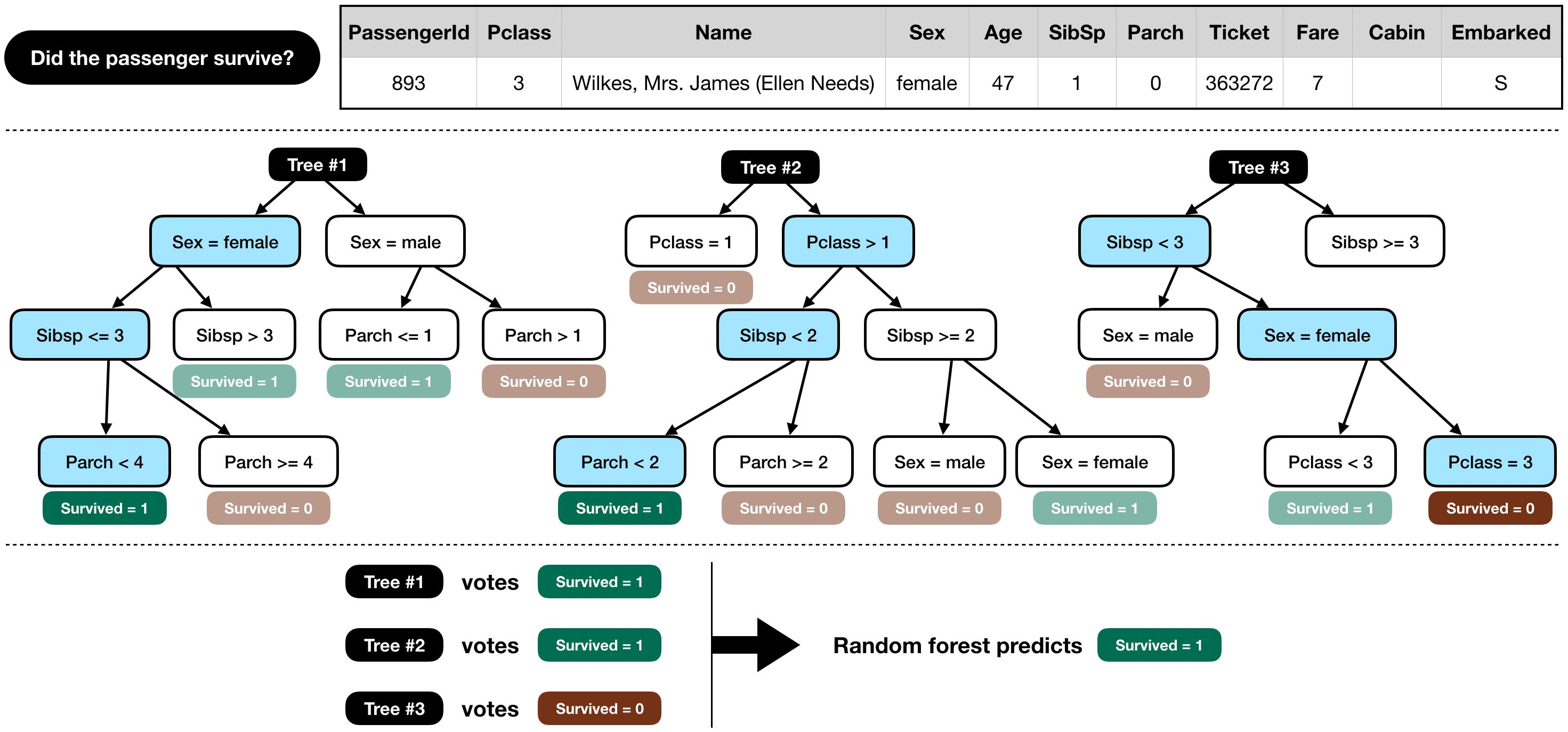

The illustration above is a nice example: it shows how a model predicts whether a passenger survives the Titanic.

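Here, three trees look at the passenger's data in different orders, and then the ensemble votes on whether the person survives. (In a boosted model the trees' outputs are added together rather than counted as votes, but the picture conveys the idea.)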

Sex - Gender

pclass - Ticket Class. There were 3 levels.

Age - Age

sibsp - Number of siblings or spouses aboard

parch - Number of parents or children aboard

After training the model, you can ask which variables influence the result.
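One common way to ask that question is permutation importance: shuffle one column, see how much the accuracy drops, and repeat for every feature. The sketch below is an assumption-heavy toy, not the method used in this project. The passengers and labels are invented, `toy_model` is a hand-written rule standing in for a trained model, and the function names are hypothetical. CatBoost has its own built-in feature-importance tools, which may compute the scores differently.

```python
import random

# Invented passengers, for illustration only (not real Titanic records).
passengers = [
    {"sex": "female", "pclass": 1, "age": 29, "sibsp": 0, "parch": 0},
    {"sex": "female", "pclass": 3, "age": 22, "sibsp": 1, "parch": 0},
    {"sex": "male",   "pclass": 1, "age": 40, "sibsp": 1, "parch": 2},
    {"sex": "male",   "pclass": 3, "age": 35, "sibsp": 0, "parch": 0},
    {"sex": "female", "pclass": 2, "age": 30, "sibsp": 0, "parch": 1},
    {"sex": "male",   "pclass": 2, "age": 50, "sibsp": 1, "parch": 0},
    {"sex": "male",   "pclass": 3, "age": 19, "sibsp": 0, "parch": 0},
    {"sex": "female", "pclass": 3, "age": 4,  "sibsp": 1, "parch": 1},
]
survived = [1, 1, 1, 0, 1, 0, 0, 1]  # invented labels that match the toy rule


def toy_model(p):
    """Stand-in for a trained model: it only looks at sex and pclass."""
    return 1 if p["sex"] == "female" or p["pclass"] == 1 else 0


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when one feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importance = {}
    for feature in rows[0]:
        drops = []
        for _ in range(n_repeats):
            column = [r[feature] for r in rows]
            rng.shuffle(column)  # break the link between this feature and the label
            shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importance[feature] = sum(drops) / n_repeats
    return importance


importance = permutation_importance(toy_model, passengers, survived)
for name, score in sorted(importance.items(), key=lambda kv: -kv[1]):
    print(f"{name:7s} {score:.3f}")
```

Features the model never looks at (here age, sibsp, and parch) score exactly zero, because shuffling them cannot change any prediction. Features the model relies on show a positive drop.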
